SEO for AI: How to Make Your Product Discoverable by LLMs

How No-Code Sites and Substack Posts Can Become Invisible to AI (Unless You Fix This)

How often do you use Google Search?

Now, how often do you find yourself searching via ChatGPT, Perplexity, Claude, or Gemini?

I’ll never forget the moment I casually asked ChatGPT, “Is there any tool that does analytics on all Substack newsletters?”

And there it was, in the answer, my own scrappy little app. A project I’d barely promoted. It had a few dozens of users, tops. Yet somehow, ChatGPT knew about it.

My first reaction? Excitement.

My second? Utter confusion.

How did an AI, supposedly trained on data up to a certain cutoff, find my obscure tool? Why my app? What had I unknowingly done right?

As a builder, part of my launch checklist has sometimes included basic SEO, write good copy, submit to directories, share on socials. But this moment cracked open a bigger question: how do you actually make something searchable by AI today?

I started wondering:

How does AI search work?

Does traditional SEO still apply?

What about my writing on Substack or Medium—can that be discovered by AI tools?

Are the AI-powered website builders and no-code platforms helping or hurting discoverability?

That surprising ChatGPT result sent me down a rabbit hole. I started dig into months of experiments, app launches, and figuring out how AI systems actually index and recall content.

This article is the result of that deep dive.

It’s a long one, so feel free to jump to what interests you most:

How AI Search Actually Works

Why AI Can’t See Your Web App—And How to Fix It

Optimizing Your Substack and Blog Posts for AI Discoverability

1. How AI Search Actually Works?

We’ve grown used to Google returning a list of links. But AI tools like ChatGPT, Perplexity, and Claude don’t just search, they answer. And how they do that is fundamentally different.

Let’s break down how AI search actually works, and what it means for making your content findable.

1.1 The RAG System Behind Every AI Answer

At the core of most AI search engines lies a concept called Retrieval-Augmented Generation (RAG). If you’ve read my earlier article on the topic, this will sound familiar.

Think:

LLM = Expert with broad knowledge

Retriever = Research Assistant fetching current, relevant information on demand

When you ask a question, RAG kicks in:

The retriever fetches "relevant" pages based on semantic similarity

The filter determines which sources are "trustworthy"

The generator synthesizes an answer using that information.

You’ve seen this in action when Perplexity cited a niche blog post, or ChatGPT with browsing dug up something obscure in real time.

The key insight for builders: RAG systems don't just find your content—they decide if it's worth citing. Understanding this pipeline helps you optimize for each stage.

1.2 AI Doesn’t Crawl the Web Like Google—It Borrows

One of the most surprising things I learned while digging into AI search? Most AI tools don’t actually crawl the entire internet themselves.

They borrow.

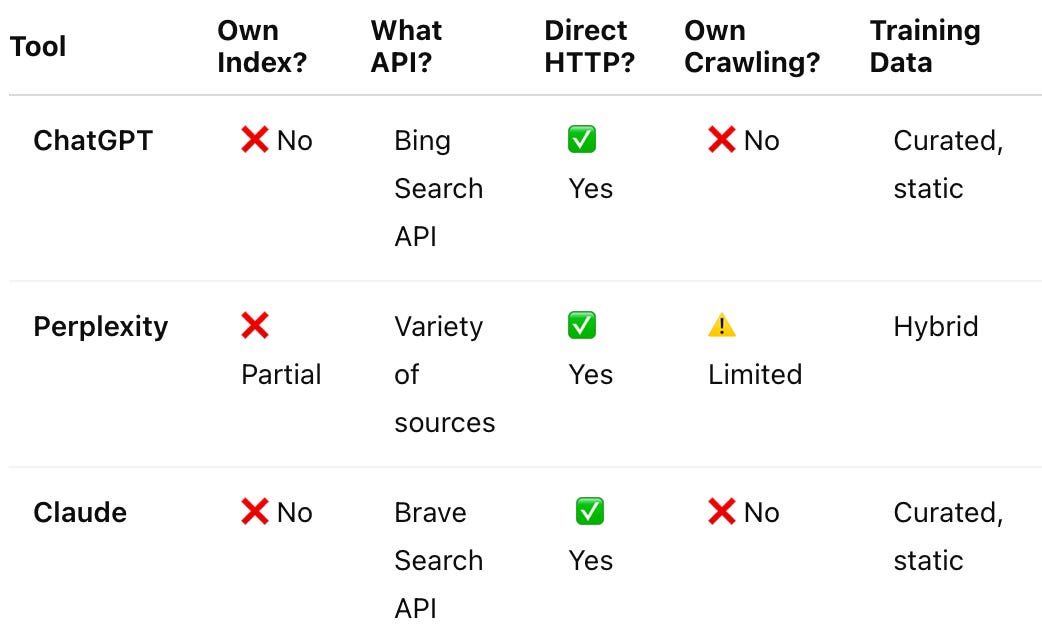

Here’s a comparison on how a few popular tools discover and retrieve data:

🧩 ChatGPT (with Browsing)

Uses Bing’s search index. It doesn’t crawl; it borrows from Microsoft’s infrastructure.🧠 Perplexity

A hybrid model. Part Bing index, part lightweight direct crawling, plus its own re-ranking logic. It pulls, filters, and cites in real time—great for recent and long-tail content.☁️ Claude

Relies on curated datasets and may query external APIs—but it does not crawl the open web directly.(Gemini)

Powered by Google’s internal infrastructure—different class altogether, not compared directly here.

Takeaway: If your content isn’t indexed by Google, Bing or another partner, it’s likely invisible to these AI systems. They’re not finding you unless someone else already has.

Turns out I’m not just lucky, broader data reflects it too. In a recent SEO study analyzing 10,000 finance/SaaS query-answer pairs, there was a strong correlation (~0.65) between websites ranking on Google’s first page and being mentioned in LLM-generated responses, while Bing showed ~0.5–0.6 correlation. Another analysis of 400+ high-intent keywords found that ranking in Google’s top 3 resulted in a 67–82% chance of being cited by AI tools like ChatGPT and Perplexity. That’s not anecdote; it’s systematic impact.

1.3 The Infrastructure Powering This New Era

So what happens after retrieval? AI search tools don't just return a list of links. They orchestrate a complex pipeline behind the scenes to produce that polished answer.

Let’s take Perplexity as a lens, since they’ve been relatively transparent about their system.

When you ask a question, Perplexity doesn’t just run a single search and return a few links. It spins up what’s basically a multi-agent team behind the scenes:

🧭 Planning Agent – figures out how to break your query into smaller pieces or follow-up searches.

🔍 Search Agents – run live queries across APIs like Bing, Brave Search, Google, and even niche sources like Tavily.

📊 Filtering Agents – review the results, sort them by semantic relevance, and discard low-signal noise.

✍️ Generation Agent – finally, this one synthesizes everything into a coherent, conversational answer—with citations.

All of this happens in seconds. And the entire process is powered by:

Massive GPU clusters

Vector databases that store semantic embeddings

API connectors to real-time sources

Multi-agent planning logic to handle conversation context

It’s not just a chatbot sitting on top of Google, it’s a mini research team, operating multiple systems at compute scale.

1.4 Why AI Search is So Different (and So Expensive)

Let’s compare the two approaches:

Think of it this way:

Google is like flipping through a card catalog.

AI is like asking a smart librarian: “What’s the most overlooked book on this topic?”

Why It Costs More

Semantic Matching

LLMs convert your query into multi-dimensional vectors and search for meaning, not keywords. More accurate, but compute-heavy.Conversational Memory

Follow-up queries are contextual (“How about for freelancers?”), which adds complexity and state management.GPU Infrastructure

AI search runs on expensive, parallelized compute. That’s why features like browsing often sit behind paywalls.

AI search isn’t just a better version of Google. It’s a whole new system, with new rules, new tech, and new expectations for content visibility.

2. Why AI Can’t See Your Web App—And How to Fix It

2.1 How My Web Apps Are Doing

I’ve built a few tools over the year, three I maintain most actively:

QuickViralNodes – early Flask app with basic HTML and SSR

SubstackExplorer – newer, built with Next.js and server-rendered routes

Personal website – built with an AI site builder (Lovable), hosted as a client-rendered React SPA

Despite being newer and more polished, my personal site couldn’t be found by ChatGPT, even when I explicitly gave it the URL.

That made something click:

If the older projects were searchable and the newer one wasn’t, maybe it wasn’t about quality, but how the content was made.

🔎 SSR vs CSR: What AI Bots Can Actually See

AI crawlers do not execute JavaScript. Unlike Googlebot, which renders JavaScript-heavy SPAs, tools like GPTBot and ClaudeBot rely mostly on static HTML. [1, 2, 3]

Both QuickViralNodes and SubstackExplorer were built with SSR. Even though they looked rougher, they produced fully-rendered HTML—perfect for AI crawlers.

My personal site, however, used CSR via a React SPA. While blazing fast for users, it presented a JS-only shell to AI bots. No text. No metadata. No chance of being indexed.

The irony? The simpler, more old-school projects were better optimized for the AI era.

2.2 What Stacks Do AI Dev Tools Use?

The Hidden Bias in AI Code Generators

In my experience, most AI dev tools—Lovable, Bolt, V0, Replit, Gemini…—default to React-based frameworks. That’s not an accident.

These platforms optimize for fast output and familiar scaffolding, not crawler visibility.

Here’s why React/Next.js is their default:

React is well-documented and AI-trainable (lots of public code)

Next.js supports modularity, routing, and deployment with minimal config

Most AI-generated code “just works” in React environments

But here’s the trap: unless SSR is explicitly enabled, many of these projects default to client-side rendering. Fast to ship, invisible to AI.

🔧 Platform-by-Platform Breakdown

Be ware that, if you’re using one of these tools, or planning to build with AI’s help: You’re very likely getting a React-first project.

2.3 How to Build For AI Discoverability

Server-rendered HTML, structured content, and a few SEO tricks still matter more than you'd think. Whether you're retrofitting an AI-generated site or building from scratch, these strategies will make your content findable by AI systems.

1. Render for Bots, Not Just Browsers

As detailed in section 2.2, AI crawlers don’t behave like Chrome. They fetch the raw HTML and parse it, quickly.

✅ What to do:

Use server-side rendering (SSR) for dynamic pages

Or static site generation (SSG) for blog/docs-style content

Avoid full client-side rendering (CSR) unless you're rendering supplemental content

Test with

curl https://yoursite.com— if meaningful content isn’t in that output, AI bots won’t see it either

2. Embed Structured Data in HTML (JSON-LD)

Structured data helps LLMs understand what your site contains, not just what it says. Think semantic tagging for machines

✅ What to do:

Use JSON-LD blocks directly in HTML

Use schemas like:

Article,FAQPage,Product,Organization,PersonLink entities with

@graphwhere relevant (helps build semantic context)

// Minimal JSON-LD example, insert this into your HTML <head>:

<script type="application/ld+json">

{

"@context": "https://schema.org",

"@type": "Article",

"headline": "Making Your Content Discoverable by AI",

...

}

</script>3. Format Content for Readability (by Humans and AI)

LLMs love structure. So do humans.

✅ What to do:

Use

<h1>,<h2>,<h3>in logical hierarchyStart pages with concise, clear summaries

Use bullet points, numbered steps, bolded facts

Avoid large walls of text or overly interactive interfaces without fallback content

Basically: structure in a way that’s skimmable. If it looks like something you’d copy-paste into a Notion doc or summarize for a friend, it’s probably LLM-friendly too.

4. Guide the Crawlers With robots.txt, sitemap.xml, and llms.txt

You still need the basics: let the right bots in, and show them where to go.

✅ Checklist:

robots.txt→ allow trusted bots (e.g. PerplexityBot, ClaudeBot)sitemap.xml→ submit to Google and Bingllms.txt(emerging) → provide LLM-friendly summaries and important URLs

# llms.txt - LLM metadata hints

version: 1.0

website: https://yourdomain.com

sitemap: https://yourdomain.com/sitemap.xml

summary_ai_seo_guide: >

Guide on optimizing websites and content for AI-powered search tools.

allow: GPTBot, ClaudeBot, PerplexityBot5. Optimize Performance

AI bots have short timeouts. If your content takes 2 seconds to render, you’ve already lost.

✅ Tips:

Optimize performance: minimize render-blocking scripts, compress assets

Use semantic HTML and ARIA labels for accessibility

Avoid deep nesting and long URL query strings, keep URLs clean and shallow

6. Get Indexed Where AI Looks

As discussed in section 1.2: AI tools discover your site through Bing and Google indexes. If you’re not showing up there, you’re likely invisible to them.

✅ Do the fundamentals:

Make sure your public pages are crawlable and indexable

Use descriptive page titles and meta descriptions

Ensure your content is unique, helpful, and targets real questions

Platforms like Substack and Medium already do some of this for you, but for your own sites, you’ll want to be intentional.

7. Build Trust Through Author and Brand Signals

LLMs pull context from your info pages and bylines.

✅ What to include:

An About page with your background, mission, or product story

Bylines that match your public profiles (LinkedIn, X, etc.)

Descriptive alt text for images, logos, and bios

When the AI knows who you are, it’s more likely to cite you confidently.

8. Build External and Internal Links

AI tools value popularity and relevance, just like traditional SEO.

✅ Earn authority by:

Link between related pages on your site

Get referenced on niche directories, forums, and blogs

Promote your content in newsletters, social media, or via backlinks from trusted sources

AI may not “count” every metric exactly the way Google does, but trust flows the same way.

This isn’t just SEO 2.0, it’s a shift in how content is discovered and surfaced.

In the age of AI, your goal isn’t just to rank, it’s to be retrievable, understandable, and quotable by systems that scan, synthesize, and serve your content in milliseconds.

2.4 What I Changed in the End

After seeing how rendering and structure impacted visibility, I did a full audit of my sites:

✅ Added

llms.txtandrobots.txtfor LLM friendliness✅ Submitted

sitemap.xmlto Bing + Google✅ Optimized my personal site by pre-populating more static information

✅ Embedded structured metadata and improved heading structure

✅ Made all important pages testable via raw HTML (curl-ready)

Now, they’re not just pretty (biased on personal opinions), they’re findable.

3. Optimizing Your Substack and Blog Posts for AI Discoverability

Not every builder wants, or needs, to manage a custom-coded site. If your writing lives entirely on Substack, you still have meaningful control over how discoverable your content is to AI systems.

The good news? Substack is more AI-friendly than you think.

The bad news? Just hitting “Publish” isn’t enough.

Let’s break down how to structure and tune your Substack so that AI models can find, parse, and quote your work.

Traditional SEO in the Age of AI

’s article "The Complete Guide to Optimizing Your Substack for SEO" is an excellent breakdown of classic SEO strategies adapted for Substack. Here’s a summary of his core advice:These SEO tactics remain foundational—even in an AI context—because AI tools are built on top of search infrastructure.

AI-Specific Optimizations

While traditional SEO helps you get indexed, these additional strategies ensure your content is usable and quotable by AI systems.

1. Make Your Posts Public

AI crawlers like GPTBot, ClaudeBot, and PerplexityBot do not authenticate. If your post is behind a paywall or subscriber login, it’s invisible.

What to do:

Keep evergreen or informative posts free and public.

Publish to web in addition to email.

2. Leverage Your RSS Feed

Substack automatically generates a full-text RSS feed at yourname.substack.com/feed. AI systems like Perplexity and Claude use these feeds to ingest and evaluate your content.

What to do:

Ensure the first paragraph has meaningful context (not just an image or intro fluff).

Avoid “click to read more” formats that strip context.

3. Write AI-Readable Summaries

The first 1–2 paragraphs of your post often serve as the semantic anchor for how LLMs embed and recall your content.

What to do:

Lead with a sharp summary or question.

Include key entities, concepts, and verbs early.

4. Cluster Related Posts

AI systems benefit from content relationships. Interlinking related posts creates semantic groupings that LLMs use to associate context.

What to do:

Reference past posts using consistent language.

Build topic hubs or resource pages.

Use “Further reading” callouts or in-text links.

5. Use Consistent Terms and Entities

If you alternate between “AI SEO,” “LLM optimization,” and “semantic content strategy,” LLMs may not connect them.

What to do:

Use consistent terminology for recurring themes.

Use full names or canonical terms for people, tools, and frameworks.

6. Check with curl or “View Source”

Even though Substack renders nicely in browsers, AI bots only see the raw HTML. If the content isn’t there without JavaScript, it doesn’t exist to them.

What to do:

Run

curl https://yourpost.substack.comand check the output.Make sure all core content is visible in plain text.

7. Stay Active and Cited

LLMs favor content that’s surfaced repeatedly, cited externally, or linked from other trusted sources. Inactivity can push you out of the AI memory window.

What to do:

Publish regularly, even short updates.

Promote externally (Reddit, X, community posts).

Get others to quote, link, or recommend your content.

My Own Post-Publication Audit

After pulling together this checklist, I turned it back on myself:

I checked my RSS feed and verified that all my public posts were truly public, especially the evergreen ones.

I flagged several older posts to rewrite their intros and summaries so they’d better anchor AI embeddings.

I made a to-do list to revise headings and interlink related pieces using consistent phrasing.

I ran a

curlrequest onhttps://jennyouyang.substack.com/—and was happy to see rich, parseable HTML.I am also cross-posting some Substack pieces to Medium and other public platforms, adding more pathways for indexing and citation.

It struck me: this is one of those posts that didn’t just take time to write—it took time to tidy up afterward.

Because in the AI era, publishing is just the first draft of discoverability.

Final Thoughts: The New Visibility Game

This journey started with a surprising ChatGPT result, but it led me to something much larger:

We’re no longer just building apps and writing content. We’re building answers.

In the AI era, visibility isn’t about keywords or virality. It’s about being structurally compatible with how machines perceive and retrieve meaning.

LLMs don’t “browse.” They synthesize.

They don’t “index the internet.” They selectively ingest structured clarity.

That changes everything.

App Design and Content Creation Are Converging

In the past, writing was about persuasion and aesthetics. Building was about functionality.

But AI flattens that distinction.

Your Substack post and your app landing page are now processed by the same systems, judged by the same logic:

Is it structured?

Is it connected to trusted sources?

Does it load fast?

Can it be parsed, cited, reused?

Everything is content. Everything is a data object.

If your code, copy, or design isn’t legible to LLMs, it might not exist to them.

AI Rewards Discipline, Not Hacks

This isn’t another growth hack moment.

The creators winning in AI discovery today aren’t always the loudest or the most polished. They’re the ones who:

Structured their content cleanly

Built with semantic clarity

Published consistently

Linked generously

Used open standards

AI systems reward the people who did the boring things right all along.

This flips the usual builder culture on its head. Shiny launches and viral threads help with social proof, but AI only sees what’s well-formed and well-connected.

In this new game, discipline > spectacle.

But here's what's really happening: when AI finds your work, it doesn't just discover it—it legitimizes it. Being cited by ChatGPT or Perplexity isn't just traffic. It's authority.

The New Economics

AI search changes who captures value. When Perplexity synthesizes your content into an answer, users get what they need without visiting your site. You're not just competing for clicks anymore—you're competing to be the source AI systems trust and cite.

The winners won't necessarily be the best products. They'll be the products that speak AI's language.

So, What Now?

Take the boring steps.

Write for meaning, not just for flair.

View source on your own site.

Test your homepage.

Run your blog through Perplexity.

You can’t just launch anymore. You have to surface.

The AI layer doesn’t care how good your content is—if it can’t read it, it doesn’t exist.

So ask yourself one last thing:

Is your site ready to be someone’s answer?

I’ve been hearing vaguely about LEO (LLM Engine Optimization), I thought there was no secret to it but what you just described explains that there is a recipe to actually improve website presence to AI. I think this is a really nice breakdown, and I cannot wait to implement some of this, especially by starting to uploade my sitemap to Bing for sure, thanks Jenny!

Impressive overview, Jenny! I'm not adept at programming, but this was pretty straightforward to follow. It appears that AI favors well-written, well-structured writing that has more depth and more "digestable" elements that human readers also like.

It also sounds like knowing how to make clear, concise overviews and strong hooks are going to help a lot outside of the backend work that needs to be done.

I've noticed in my own AI tinkering that it has referenced a lot of my Medium work when I ask it something that I'm reflecting on so there's another reason to consider not ditching Medium altogether.